NPU (Neural Processing Unit) is a processor specialized for AI processing, designed to handle deep learning and machine learning algorithms quickly and efficiently.

In recent years, AI technology has rapidly become a part of daily life. From facial recognition on smartphones and obstacle detection in self-driving cars to factory automation and smart home appliances, AI is being used in many fields. One of the core technologies supporting AI processing is the “NPU”.

NPUs are processors dedicated to AI that run AI algorithms quickly and efficiently. Because of this specialization, they reach a level of performance and power efficiency on AI workloads that is hard for general-purpose CPUs and GPUs to match, which has greatly advanced the practical use of AI.

This article focuses on the unsung hero supporting the AI society, the “NPU,” and explains its features, differences from CPUs and GPUs, and real-world use cases in an easy-to-understand way.

- NPU is a highly efficient processor specialized for AI processing

- Used in various AI applications such as smartphones and self-driving cars

- Enables faster processing and lower power consumption than CPU/GPU

- Specialized for AI inference processing, not for training

The following related article explains the basics of CPUs: how to read manufacturers and model numbers, performance indicators, and how to choose a CPU from the perspective of performance and compatibility.

≫ Related article: How to Choose a DIY PC CPU [Performance / Features / Compatibility]

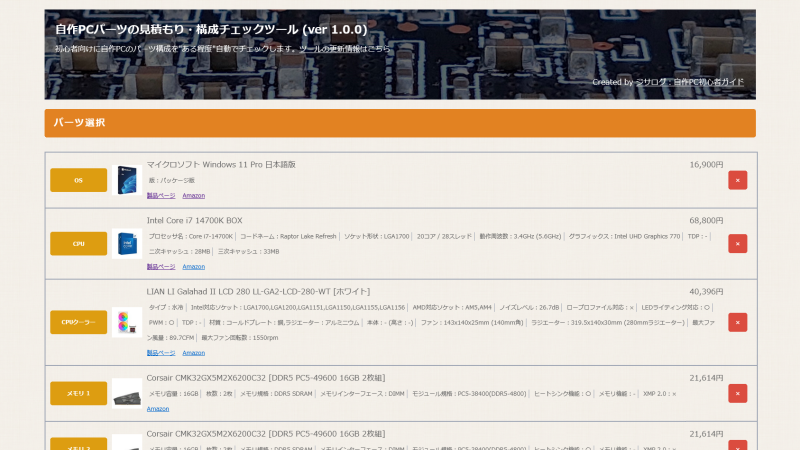

Select PC parts and online stores to instantly generate an estimate, check compatibility, and calculate power requirements. You can save up to five different builds, making it easy to try out multiple configurations.

≫ Tool: PC Parts Estimation & Compatibility Check Tool

What is an NPU?

First, let’s cover the basics of NPUs and how they differ from CPUs and GPUs.

A Processor Specialized for AI Processing

NPU (Neural Processing Unit) is a processor specialized for AI processing, designed to efficiently perform calculations for artificial intelligence (AI).

NPUs can execute AI tasks such as deep learning and machine learning at high speed, and are especially effective in tasks like image recognition and voice recognition.

Compared to traditional CPUs and GPUs, NPUs have an architecture specialized for AI calculations, offering high power efficiency and the ability to process large amounts of data with less energy.

Thanks to this, AI is being used in various fields such as smartphones, self-driving cars, and IoT devices.

The Role and Importance of NPUs

With AI technology rapidly spreading into daily life, the role and importance of NPUs are increasing.

AI is being used in all kinds of fields, from obstacle detection in self-driving cars and factory automation to smart home appliances.

For personal use, examples include facial recognition on smartphones, generative AI like ChatGPT, and assistant AI built into smartphones and computers.

With ChatGPT, the actual processing is done on the server side, so devices like smartphones and computers do not process AI tasks themselves. However, for the following reasons, there is a growing need for AI processing on the device side as well.

- Privacy Protection: Data such as personal voice, images, and operation history can be processed without sending it to the cloud. This is important for companies handling confidential information and for personal data protection.

- No Need for Communication / Works Offline: AI can function without an internet connection (e.g., voice recognition, image classification). It can be used stably even in areas with weak signals or while moving.

- Low Latency and Real-Time Processing: Since processing is done on the device, response is faster than via the cloud. Examples include background blur in video calls, instant response from voice assistants, and real-time subtitles.

- Reduced Communication Costs: No need to communicate with the cloud every time, saving mobile data and communication fees. This also greatly reduces costs for service providers.

- Reduced Server Load / Improved Scalability: If inference processing for each user is completed on the device, cloud resource consumption decreases. This makes large-scale deployment easier for service providers.

For these reasons, being able to efficiently process AI on the device side is essential for further AI adoption in the future.

Compared to traditional CPUs and GPUs, NPUs are optimized for neural network calculations commonly used in AI processing, and are especially good at deep learning inference tasks.

Inference processing means using a trained AI model to make predictions or classifications on input data.

To use AI, it is first necessary to perform “training” using a large amount of data to create a trained AI model.

However, this training process requires extremely high computational performance (*), so NPUs installed in smartphones and computers cannot handle it.

Therefore, NPUs are processors specialized for inference processing using trained models.

* Extremely high computational performance means using thousands to tens of thousands of high-performance GPUs costing 2 to 3 million yen each (the typical scale for corporate AI training).
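
To make the distinction concrete, here is a minimal Python sketch of what inference looks like once training is finished. The weights, bias, and function names are hypothetical values chosen for illustration; a real model has millions of parameters produced by a training run, but the principle is the same: the parameters are fixed and the device only evaluates them against new input.

```python
import math

# Hypothetical "trained" parameters. In practice these come out of a
# training run on large hardware; here they are fixed by hand.
WEIGHTS = [0.8, -0.4, 0.3]
BIAS = -0.1


def infer(features):
    """Run one forward pass: weighted sum followed by a sigmoid."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def classify(features, threshold=0.5):
    """Turn the model's score into a yes/no prediction."""
    return infer(features) >= threshold


sample = [1.0, 0.5, 2.0]
print(round(infer(sample), 3), classify(sample))
```

Nothing in this code updates `WEIGHTS` or `BIAS`. Adjusting them from data is the training step, which is the part that needs far more computation.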

Thanks to this, it is possible to use AI functions in real time on various devices such as smartphones, self-driving cars, and smart home devices.

Difference Between NPU and CPU

Both NPUs and CPUs are computer processors, but their roles and design philosophies are very different.

CPUs are general-purpose processors for performing general calculations and are good at executing program instructions in order.

They can handle a wide range of tasks, such as OS management and running applications, but for specific calculations like AI, they are not as efficient as NPUs.

On the other hand, NPUs are dedicated processors designed to efficiently handle AI tasks.

They are specialized for neural network calculations used in various AI applications and can process large amounts of data in parallel.

As an analogy, think of a CPU as a person who can do many jobs at an average level, and an NPU as a person who is extremely fast and accurate at one specific job. Each has strengths and weaknesses, and the idea is simply to put the right person on the right job.

With NPUs, AI model inference can be performed quickly, and performance improvements are expected especially in fields such as image recognition and natural language processing (voice assistants, machine translation, chatbots).

NPUs are often built into the same chip as the CPU, but they are designed as a separate unit from the CPU cores.

Because Intel and AMD list the NPU in the specifications on their official CPU pages, it is easy to think of the NPU as part of the CPU.

Physically, the NPU is inside the CPU chip, but as a computational unit it is distinct from the CPU.

In this way, NPUs and CPUs play different roles in their respective areas of expertise, and as AI evolves, the importance of NPUs is increasing.

Difference Between NPU and GPU

As with CPUs, GPUs differ greatly from NPUs in their roles and design philosophies.

GPUs were originally developed for graphics rendering.

They have many processing units (cores) and are very strong at simultaneous parallel processing, such as image processing and video rendering.

This parallel processing feature matches well with the large-scale matrix calculations required for AI training and inference, so GPUs are now widely used for AI purposes.

GPUs are flexible and can be used for not only AI but also scientific computing, simulations, and video editing as general-purpose parallel processors.

On the other hand, NPUs are dedicated processors specialized for neural network inference in AI processing.

They do not have the versatility of GPUs, but for specific AI processing (inference), they are optimized for even faster and more power-efficient execution.

Especially in low-power devices such as smartphones and laptops, NPUs can run AI functions more efficiently than GPUs.

Benefits of NPUs

Let’s explain the benefits of having an NPU.

Faster AI Processing and Power Saving

Compared to regular CPUs and GPUs, NPUs are optimized for neural network calculations and have high parallel processing capabilities, so they can process large amounts of data quickly.

This makes real-time data processing and the use of more complex models possible, improving AI response speed.

Furthermore, NPUs are highly power efficient, so even when performing the same calculations as CPUs or GPUs, they can reduce power consumption.

For this reason, NPUs are increasingly used in mobile devices and embedded systems where battery life is important, as they can maintain high performance while saving power.

Reducing CPU and GPU Load

Because NPUs are designed specifically for AI processing, they can reduce the load on CPUs and GPUs.

Normally, CPUs and GPUs are designed for general-purpose calculations, but AI processing requires a lot of matrix and vector calculations.

These calculations are what NPUs excel at, and having an NPU allows CPUs and GPUs to focus on other tasks, improving overall system efficiency.
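
As a rough illustration of the matrix and vector calculations mentioned above, the following Python sketch computes one fully connected neural network layer by hand. The function names and sample values are made up for this example. The inner loop is a long run of multiply-accumulate steps, which is the kind of work dedicated AI hardware is built to do many of at once.

```python
def dense_layer(weights, inputs, biases):
    """Compute one fully connected layer: outputs = weights @ inputs + biases."""
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for w, x in zip(row, inputs):
            acc += w * x  # one multiply-accumulate (MAC) operation
        outputs.append(acc)
    return outputs


def mac_count(n_outputs, n_inputs):
    """Number of multiply-accumulate operations in one dense layer."""
    return n_outputs * n_inputs


weights = [[1.0, 2.0, 3.0],
           [0.0, -1.0, 0.5]]
print(dense_layer(weights, [1.0, 1.0, 2.0], [0.0, 1.0]))
print(mac_count(1024, 1024))
```

Even a single layer with 1,024 inputs and 1,024 outputs needs over a million multiply-accumulate steps per input, which is why offloading this work frees the CPU and GPU for other tasks.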

Complete Local Processing (Privacy / Security / No Need for Internet)

NPUs process data directly on the device, so there is no need to send data to the cloud.

By completing processing locally, input data is not sent to the server, so privacy is protected and security risks are reduced.

When AI runs on a server, there is a concern that input data such as personal information or company secrets could be seen by others. If processing is done locally, there is basically no need to send that input data to the server.

However, it is not correct to assume that local processing on an NPU is 100% safe.

This is because, depending on the application design, data can still be sent to the server before or after the local NPU processing.

In other words, the situation has changed from “input data is inevitably sent to the server because processing is done on the server” to “input data is sent to the server only if the application chooses to send it.”

Because the service provider or application design may still transmit input data, privacy and security risks are not eliminated entirely.

Therefore, regardless of whether AI processing is done locally with an NPU, always check the terms of use to see whether input data is being collected. If you do not trust the provider even when it says it does not use your data, consider not using the service.

Local processing also removes the dependence on the server, so AI features work stably even in environments with unstable internet connections.

And because data no longer has to travel to a server and back, responses arrive sooner and real-time response becomes possible.

[For Businesses] Reducing Server Resources, Communication, and Power Costs

For AI services that process data via servers, businesses incur server resource, communication, and power costs.

For example, the well-known generative AI “ChatGPT” is used by about 400 million people worldwide. To handle this huge number of requests, a large number of high-performance servers are needed, and every time the server is accessed for processing, communication and power costs are incurred.

If NPUs are installed and AI processing can be done locally, these costs can be greatly reduced.

Also, by reducing or eliminating server-side processing, scalability improves.

Scalability means the ability of a system or service to efficiently expand its performance or scale in response to increased load or demand.

In other words, it becomes easier to handle 10 or 100 times more users, and resources can be flexibly increased as needed.

Disadvantages of NPUs

Let’s explain the disadvantages of having an NPU.

[For Businesses] Application Support is Required

Applications must explicitly support the NPU. This is mainly a disadvantage for businesses rather than users.

NPUs are designed to process specific calculations quickly, but dedicated applications or drivers are needed to maximize their performance.

Unlike general-purpose CPUs and GPUs, NPUs are specialized for neural network processing, so existing apps may not work properly as they are.

Developers need to create apps optimized for NPUs, which requires extra time and effort.

Also, to use NPUs effectively, developers need to understand the NPU architecture and characteristics and program accordingly.

On the app side, it is necessary to determine whether the processing is suitable for the NPU and whether the computer has an NPU, and then assign the processing to the NPU.

Thus, when introducing NPUs, it is necessary to consider not only the hardware but also app support.
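
The decision an app has to make can be sketched roughly as follows in Python. The backend names, the preference order, and the `pick_backend` function are all hypothetical. A real application would ask its AI runtime which devices are actually available rather than passing in a hand-written set.

```python
# Hypothetical backend names. A real app would query its AI runtime
# for the devices that are actually available.
PREFERENCE = ["npu", "gpu", "cpu"]


def pick_backend(available, task_is_neural_inference):
    """Choose where to run a task, falling back to the CPU."""
    if not task_is_neural_inference:
        return "cpu"  # general-purpose work stays on the CPU
    for backend in PREFERENCE:
        if backend in available:
            return backend
    return "cpu"  # the CPU is assumed to always be present


print(pick_backend({"cpu", "npu"}, True))   # machine with an NPU
print(pick_backend({"cpu"}, True))          # machine without an NPU
print(pick_backend({"cpu", "npu"}, False))  # task not suited to the NPU
```

The point of the sketch is that this check lives in the application. If the developer never writes it, the NPU simply goes unused.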

From a user perspective, just because you start using a computer with an NPU does not mean that AI-suitable processing will automatically be handled by the NPU.

Also, if there is no NPU or the app does not support it, processing will be done by the CPU as before, so there is no need to worry.

You may feel a bit disappointed that “even though I’m using a computer with an NPU, the app doesn’t support it, so processing isn’t efficient…” but the app won’t stop working or become unstable.

In that case, there is a possibility that future updates will add NPU support, so look forward to it.

Specific Examples of Tasks NPUs Excel At

We’ve explained the roles and benefits of NPUs, but let’s look at what kinds of tasks NPUs are good at.

The explanations below may mention specific application names or describe features you recognize from a particular app, but these are only “examples of tasks suitable for NPUs.”

Whether the actual application or feature supports NPUs is not guaranteed.

Please read with the understanding that “these types of tasks are technically a good match for NPUs.”

Real-Time AI Processing in Video Conferencing

NPUs excel at processing large amounts of data in parallel in real time, making them strong in AI tasks involving video and audio processing, such as video conferencing.

Background Blur and Virtual Background Composition

In video conferencing, features that detect participants’ faces and blur or replace the background are often used.

These processes require real-time identification and separation of people and backgrounds, with different processing applied to each, based on neural network image recognition.

Such high-load image inference processing is an area where NPUs excel, and they can execute it with lower latency and power consumption than CPUs or GPUs, enabling a comfortable experience even on battery-powered laptops.
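
The "separate, then process each part differently" step can be sketched in Python as below. This is a simplified illustration on a tiny grayscale image. The `person_mask` is assumed to come from a segmentation model (the part that would run on the NPU) and is written by hand here, and the simple box blur stands in for whatever blur a real app uses.

```python
def box_blur(image):
    """Blur a 2D grayscale image with a 3x3 box filter (edges use fewer pixels)."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += image[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


def blur_background(image, person_mask):
    """Keep pixels where the mask is 1 (person), blur where it is 0 (background)."""
    blurred = box_blur(image)
    return [
        [image[y][x] if person_mask[y][x] else blurred[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


image = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
person_mask = [[0, 0, 0],
               [0, 1, 0],
               [0, 0, 0]]  # hand-written stand-in for a model's output
for row in blur_background(image, person_mask):
    print(row)
```

In a video call, the mask has to be recomputed for every frame, which is where the continuous inference load comes from.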

Voice Noise Cancellation and Echo Removal

Noise cancellation and echo removal, which suppress ambient noise or remove echoes in real time, often use machine learning and can be efficiently executed by NPUs.

Active Noise Cancelling (ANC), which picks up environmental sound with a microphone and generates an opposite-phase sound wave to cancel it out, is another real-time calculation that NPUs can perform.

Automatic Face Framing and Gaze Correction

NPUs are also good at real-time face recognition and tracking in camera images.

For example, “automatic face framing” is a technology that automatically adjusts the frame so that the subject stays in the center even when moving, requiring continuous face recognition and position prediction.
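
Once a face position is known, the framing step itself is simple geometry. The Python sketch below assumes a face detector (not shown) reports a face center for each frame. The coordinates, the smoothing factor, and the function names are illustrative assumptions, not taken from any particular product.

```python
def smooth(previous, target, alpha=0.2):
    """Exponential smoothing: move a fraction `alpha` of the way to the target."""
    return previous + alpha * (target - previous)


def crop_window(center_x, center_y, crop_w, crop_h, frame_w, frame_h):
    """Return (left, top) of a crop centered on the point, clamped to the frame."""
    left = min(max(center_x - crop_w / 2, 0), frame_w - crop_w)
    top = min(max(center_y - crop_h / 2, 0), frame_h - crop_h)
    return left, top


# Hypothetical face centers, as a face detector might report per frame.
face_centers = [(960, 540), (1100, 540), (1300, 560)]
cx, cy = face_centers[0]
for fx, fy in face_centers:
    cx, cy = smooth(cx, fx), smooth(cy, fy)
    print(crop_window(cx, cy, 1280, 720, 1920, 1080))
```

The smoothing keeps the crop from jittering when the detected position shifts slightly between frames. The expensive part is the face detection feeding this code, which has to run continuously.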

“Gaze correction” makes it look like the user is looking at the camera even when looking at the screen. This also requires understanding and correcting the image structure, and deep learning-based processing is smoothly executed by NPUs.

Processing for Windows Copilot and AI Assistants

Features like Windows Copilot and AI assistants need to process user voice input and natural language instructions in real time.

These processes include complex AI tasks such as voice recognition, natural language understanding, and response generation, and NPUs can efficiently execute the inference processing.

NPUs are specialized for neural network inference processing and can execute faster and with lower power consumption than CPUs or GPUs.

This makes the following AI assistant operations more comfortable:

- Wake word detection such as “OK, Google!”

- Semantic analysis of questions and generation of answer candidates

- Real-time execution of operation commands (e.g., “What’s my schedule for tomorrow?”)

Furthermore, because processing can be completed on the device, communication delays are reduced, making for a smoother AI experience.

Also, for smartphones and laptops that require battery operation, the presence of NPUs that maintain high performance while saving power is important.

In this way, NPUs are very suitable for “continuous and lightweight AI processing” such as Windows Copilot and AI assistants.

Automatic Camera Correction and Face Recognition

NPUs, as AI processors that efficiently perform real-time image processing, greatly contribute to the advancement of camera functions.

Especially in devices with photo and video functions such as smartphones, NPUs handle many AI processes behind the scenes.

Automatic Correction: Achieving More Beautiful Photos in Real Time

When shooting, the camera automatically adjusts brightness, contrast, white balance, and skin tone.

These processes used to be done with static algorithms, but now, thanks to NPUs, AI correction that analyzes and optimizes images in real time is possible.

- Recognize faces and scenes and adjust exposure optimally

- Adjust colors to match subjects such as sky or plants

- Automatically apply skin correction and beautification with AI

All of these are achieved by inference processing of neural network models, and NPUs execute them with low latency and power consumption.

Face Recognition: Enhancing the Shooting Experience with Fast, High-Precision Processing

Face recognition is also one of the tasks NPUs are particularly good at.

While shooting, the NPU analyzes the video in real time and quickly detects faces, enabling advanced shooting features that anyone can use easily:

- Automatically focus on faces

- Recognize multiple faces and optimize the overall composition

- Apply background blur or portrait mode naturally

Enhancing Security Features

NPUs, as processors specialized for AI processing, also play an important role in the security field.

In particular, NPUs can efficiently run real-time data analysis and anomaly detection, which place a heavy load on traditional processors.

Real-Time Anomaly Detection and Analysis of Unauthorized Access

Security systems need to monitor logs and communication data in real time and quickly detect unusual behavior.

NPUs can perform pattern recognition with machine learning quickly and with low power consumption, making it possible to run such anomaly detection algorithms on the device at all times.

This enables quick detection of signs of cyberattacks or malware behavior, preventing damage and enabling rapid response.
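
To show the general idea of flagging unusual behavior, here is a deliberately simple Python sketch. It uses a plain statistical rule (distance from the mean in standard deviations) as a stand-in for a machine learning model, and the failed-login counts are invented sample data.

```python
import statistics


def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it is more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold


# Hypothetical failed-login counts per minute.
baseline = [2, 3, 1, 2, 4, 3, 2, 3]
print(is_anomalous(baseline, 3))
print(is_anomalous(baseline, 40))
```

A neural network based detector replaces this fixed rule with learned patterns, but the flow is the same: compare each new observation against what normal looks like, on the device, all the time.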

Achieving More Advanced and Robust Security Environments

With the introduction of NPUs, security systems evolve from simple “monitoring tools” to “AI-based defense systems that make real-time decisions and responses.”

This is especially important for endpoints (PCs, smartphones, etc.), as it means high-level protection can be provided without relying on servers.

Optimizing Battery Efficiency

By introducing NPUs, processing can be done more efficiently than with CPUs or GPUs, improving energy efficiency and making batteries last longer.

This is a feature of NPUs themselves, but there are also efforts to use AI with NPUs to “optimize battery usage itself (battery management systems).”

Instead of conventional fixed power management, dynamic, user-optimized management is being realized as follows:

- Learn user usage patterns and optimize background processing and notifications: AI learns which times and apps the user doesn’t use, and automatically suppresses unnecessary background activity and notifications, reducing wasted power consumption.

- Automatically adjust power allocation to apps based on time of day and behavior patterns: Prioritize power for frequently used apps according to usage patterns in the morning, afternoon, and night, and switch others to power-saving mode for efficiency.

- Optimize charging schedule based on battery temperature and charging history (extend battery life): Avoid charging at high temperatures, or learn when the user starts using the device before charging is complete and stop at 80-90%, providing optimal charging control to prevent battery degradation.

These decisions and predictions are made by AI models, and NPUs are used for local inference.

Therefore, even if always running, power consumption is kept low, enabling a system that quietly boosts overall battery efficiency.
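
As one possible shape for the charging control described in the list above, the Python sketch below decides whether to keep charging. Every threshold is an illustrative assumption rather than a value from any vendor, and `hours_until_unplug` is assumed to be a prediction supplied by a usage model, which is the part that would use local inference.

```python
def should_charge(level_pct, battery_temp_c, hours_until_unplug,
                  max_temp_c=40.0, hold_level_pct=80, top_up_hours=1.0):
    """Decide whether to keep charging right now.

    All thresholds are illustrative assumptions, not vendor values.
    """
    if battery_temp_c >= max_temp_c:
        return False  # pause charging while the battery is hot
    if hours_until_unplug > top_up_hours:
        return level_pct < hold_level_pct  # hold at a partial charge for now
    return level_pct < 100  # finish charging close to the predicted unplug time


print(should_charge(50, 45.0, 5.0))  # hot battery
print(should_charge(80, 25.0, 5.0))  # at the hold level, unplug is far off
print(should_charge(80, 25.0, 0.5))  # unplug is predicted soon
```

The rules themselves are cheap. What makes the system adaptive is the prediction feeding them, which changes from user to user.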

Automatic Translation and Subtitle Generation

AI tasks such as automatic translation and subtitle generation require advanced calculations to process large amounts of text and audio data in real time.

NPUs can execute processes such as voice recognition, natural language processing, and machine translation in parallel and at high speed, making them ideal for real-time automatic subtitle generation and translation features.

For example, transcribing spoken content in a video and instantly translating it into the user’s language for subtitles can be achieved with much lower latency and power consumption by NPUs compared to CPUs or GPUs.

Summary: NPU is the “Brain” of the AI Era

This article explained the features and roles of NPUs (Neural Processing Units), their differences from CPUs and GPUs, and the tasks NPUs excel at.

Here are the key points again:

- NPU is a highly efficient processor specialized for AI processing

- Used in various AI applications such as smartphones and self-driving cars

- Enables faster processing and lower power consumption than CPU/GPU

- Specialized for AI inference processing, not for training

NPUs function as the “brain” of various devices deeply involved in daily life, as processors specialized for AI processing.

NPUs, which can execute complex AI processing such as deep learning and machine learning inference quickly and with low power consumption, are built into the processors of smartphones and computers and are proving their worth in many fields.

Going forward, in the ever-evolving AI society, NPUs will become an indispensable technology.

For this reason, CPU manufacturers are focusing on NPUs, so further performance improvements and more capabilities for devices are expected in the future.

Truly, NPUs are the brains that support the AI era.

ZisaLog: Beginner’s Guide to Building a Custom PC